Reading PDF files with python

The project is hosted on github: check it out!

Github linkIntroduction

Analyzing financial data is a common use case to begin your data analytics career: you have a use case (analyzing your personal finances) and accessible data that you are familiar with (through your bank). You also have ideas of analysis to run (where is my money going, is my wealth increasing, etc..)

While many banks offer the possibility to extract your data in a structured format (excel, csv), it sometimes comes with limitations.

My previous bank did not allow me to download all of my historical data as csv files.

The only data I had were my monthly statements, available as PDF files.

With Python and the right packages, it is possible to extract data from PDF files and then analyze it.

Approach

1 - Testing Camelot: "PDF table extraction for Humans"

First step was to experiment parsing data from a single PDF file and page, with a python library, camelot.

I tested a few things and, after some trial and error, finally found how I needed to set my parameters to read a page properly.

Check out the library's website for your own requirements: it is very well documented!

def read_first_page(pdf_file, table_areas_first_page, column_positions):

# output will be a table list and first page will have index 0

table = camelot.read_pdf(

filepath = pdf_file,

pages = '1',

encoding = 'utf-8',

# stream to parse PDFs that have whitespaces between celles to simulate a table structure

flavor = 'stream'

# to detect the table, list with positions expressed in points

table_areas = table_areas_first_page,

# to detect columns, list with positions expressed in points

columns = column_positions,

)

# table to dataframe

first_page_df = table[0].df

# indicating page number in new column

first_page_df.loc[:, 'file_page'] = 1

return first_page_df

2 - Finding patterns for automation

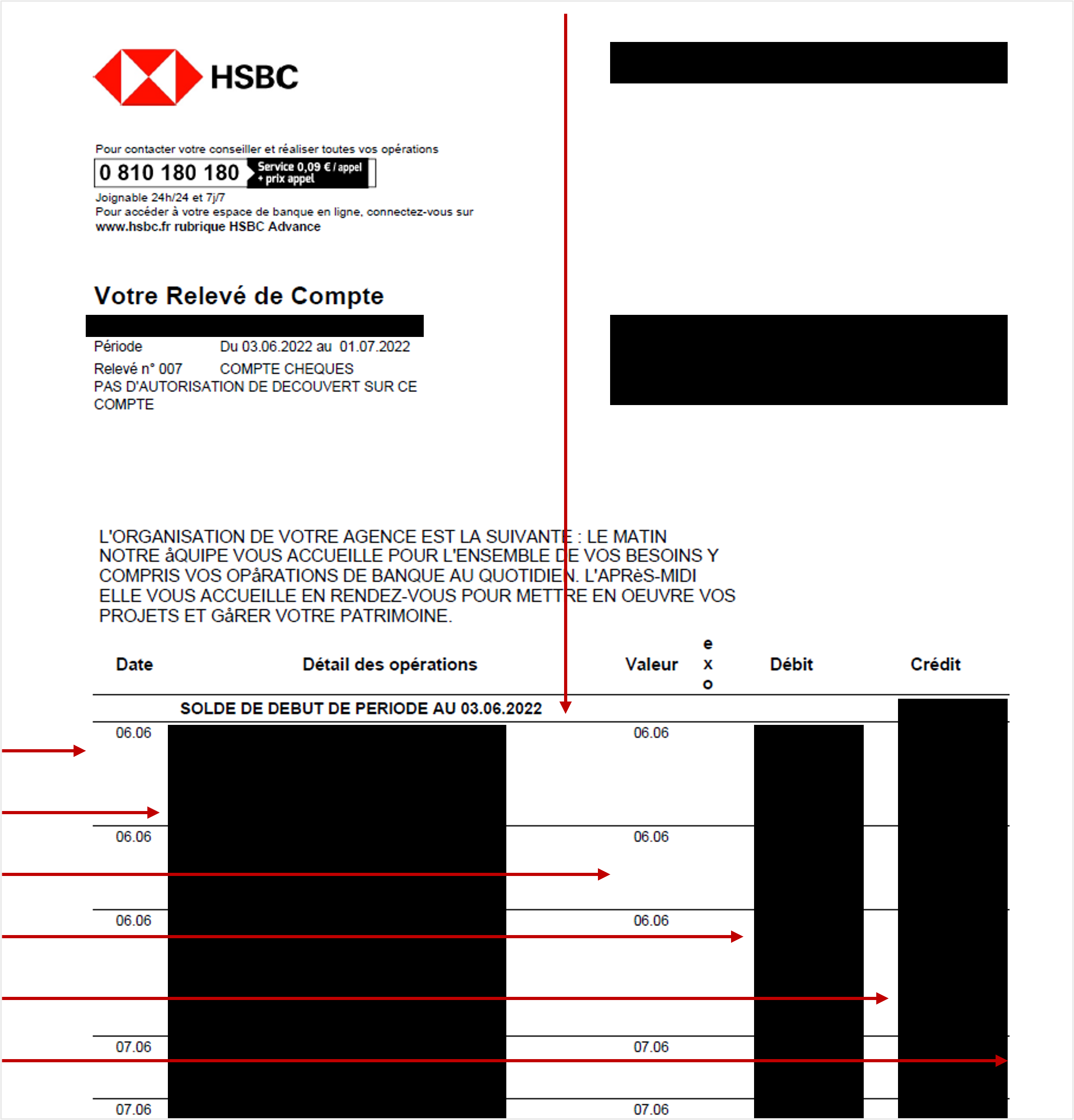

Second step was to analyze how the data was structured in my PDF files. For this, I identified where the data that I needed was in a bank statement.

Then, I analyzed a few more bank statements, to check whether there was a pattern or not.

Between the first page, the middle pages and the last pages, there were some slight differences. The table with data would begin or end at a higher or lower position (in pixels).

In the end, I needed to have 3 distinct treatments, to parse my PDF files into a single, clean csv file.

for key, value in dict.items():

file_path = value[0]

extraction_date = value[1]

account_number = value[2]

number_of_pages = value[3]

# reading first page data

first_page_df = fn.read_first_page(

file_path,

cfg.table_areas_first_page,

cfg.column_positions

)

# reading "inbetween" pages data

inbetween_pages_df = fn.read_inbetween_pages(

file_path,

cfg.table_areas_inbetween_pages,

cfg.column_positions,

number_of_pages

)

# reading last page data

last_page_df = fn.read_last_page(

file_path,

cfg.table_areas_last_page,

cfg.column_positions,

number_of_pages

)

# creating a list of dataframes

all_pages_dfs = [first_page_df, inbetween_pages_df, last_page_df]

# cleaning each dataframe in the list

# and concatenating these into a single one

all_pages_cleaned_df = pd.concat(

[fn.clean_dataframe(df) for df in all_pages_dfs],

ignore_index=True

)

3 - Defining table areas in the PDF files

Third step was to define data areas for each required treatment (1st page, middle pages, last page).

This meant taking actual measures, in pixels: where the table would start and end, on which position would a column start and end, etc..

This was a rather tedious task, especially because I had to find how to do that in the first place.

Adobe Acrobat or Foxit Reader contain such features.

4 - Testing and validating the results

Fourth step, I had to build my script and test it at a bigger scale as I had hundreds of statements. I took a few sample statements that I tested thoroughly, ultimately ensuring that the parsed data aligned with the source data. I ensured I had the expected number of transactions, that the dates and amounts were right.

Conclusion

Camelot is a great python library to parse data out of PDF files. There are many features, that allow you to get the required result and integrate it within a larger project, e.g. processing and analyzing your bank statements.